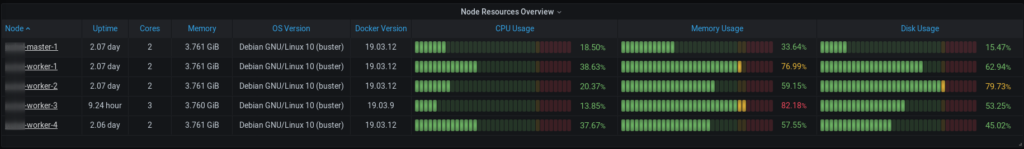

After I deployed several Java Docker containers on my self-managed Kubernetes cluster, I noticed that the containers consumed much more memory than defined in the Kubernetes resource limits.

....
resources:
  requests:
    memory: "512Mi"
  limits:
    memory: "1Gi"

....

The containers run OpenJDK 11, so by default the JVM should respect the container memory limits and not exceed them. When I ran the same container with plain Docker on the same worker node, the memory limits were respected:

$ docker run -it --rm --name java-test -p 8080:8080 -e JAVA_OPTS='-XX:MaxRAMPercentage=75.0' -m=300M jboss/wildfly:20.0.1.Final

$ docker stats

CONTAINER ID   NAME        CPU %   MEM USAGE / LIMIT   MEM %    NET I/O     BLOCK I/O   PIDS
515e549bc01f   java-test   0.14%   219MiB / 300MiB     73.00%   906B / 0B   0B / 0B     43

But when I started the same container with kubectl, the memory limits were ignored:

$ kubectl run java-wildfly-test --image=jboss/wildfly:20.0.1.Final --limits='memory=300M' --env="JAVA_OPTS='-XX:MaxRAMPercentage=75.0'"

$ kubectl top pod java-wildfly-test

NAME                CPU(cores)   MEMORY(bytes)
java-wildfly-test   1089m        441Mi
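As a rough sanity check, the heap ceiling implied by -XX:MaxRAMPercentage=75.0 can be worked out by hand. The sketch below assumes the usual unit conventions: Docker reads -m=300M as 300 MiB (which matches the 300MiB limit in the docker stats output), while Kubernetes reads memory=300M as 300 * 10^6 bytes. The function name heap_cap_mib is made up for illustration, and the percentage is passed as an integer because shell arithmetic has no floating point.

```shell
#!/bin/sh
# heap_cap_mib LIMIT_BYTES PERCENT
# Prints PERCENT of LIMIT_BYTES in whole MiB (rounded down).
# Hypothetical helper for illustration, not part of any tool.
heap_cap_mib() {
  limit_bytes=$1
  percent=$2
  echo $(( limit_bytes * percent / 100 / 1048576 ))
}

# Assumption: docker -m=300M means 300 MiB (binary units).
docker_limit=$(( 300 * 1024 * 1024 ))
# Assumption: Kubernetes memory=300M means 300 * 10^6 bytes (decimal units).
k8s_limit=$(( 300 * 1000 * 1000 ))

heap_cap_mib "$docker_limit" 75   # prints 225
heap_cap_mib "$k8s_limit" 75      # prints 214
```

Either way, the expected heap ceiling is far below the 441Mi reported by kubectl top, which points to the JVM not seeing the limit at all. Keep in mind that the heap is only one part of a JVM's memory footprint, so usage somewhat above the heap ceiling is normal.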

After several days of research, I finally found the root cause of this strange behaviour: in my environment, the kubelet and the Docker daemon used different cgroup drivers (cgroupDriver)!

How to Verify cgroupDriver

To verify whether the kubelet and Docker use the same cgroupDriver, you can run the following commands:

$ sudo cat /var/lib/kubelet/config.yaml | grep cgroupDriver

cgroupDriver: systemd

$ sudo docker info | grep -i cgroup

Cgroup Driver: systemd

In this example both use systemd, which is typical for Kubernetes since version 1.19.3.

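But if, for example, the kubelet config shows no cgroupDriver entry, the kubelet falls back to its default (cgroupfs), which then differs from Docker's systemd driver, and you need to fix this.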
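The two checks above can be combined into one comparison. This is a minimal sketch: the function names kubelet_driver, docker_driver and compare_drivers are hypothetical, and it assumes cgroupDriver appears as a top-level key in the kubelet config and that docker info prints a "Cgroup Driver:" line as shown above.

```shell
#!/bin/sh
# Print the cgroupDriver value from a kubelet config file (empty if unset).
# Assumes a top-level "cgroupDriver: <value>" line; optional quotes are stripped.
kubelet_driver() {
  awk -F': *' '$1 == "cgroupDriver" { gsub(/"/, "", $2); print $2 }' "$1"
}

# Print the cgroup driver from `docker info` output supplied on stdin.
docker_driver() {
  awk -F': *' '/Cgroup Driver/ { print $2 }'
}

# compare_drivers KUBELET_DRIVER DOCKER_DRIVER
compare_drivers() {
  if [ -z "$1" ]; then
    echo "kubelet: cgroupDriver not set"
  elif [ "$1" = "$2" ]; then
    echo "match: $1"
  else
    echo "mismatch: kubelet=$1 docker=$2"
  fi
}

# Usage on a worker node (run as root so the kubelet config is readable):
#   compare_drivers "$(kubelet_driver /var/lib/kubelet/config.yaml)" \
#                   "$(docker info | docker_driver)"
```

Parsing the text output keeps the sketch close to the manual commands; anything other than "match" means the two sides disagree or the kubelet entry is missing.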

How to Set cgroupDriver

To fix the cgroupDriver entry for the kubelet, edit the file

/var/lib/kubelet/config.yaml

and search for the entry

cgroupDriver: systemd

If it is not set, just add the entry to the config file.
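If you prefer to script this edit, the following sketch appends the entry only when it is missing and otherwise reports what it found, so an existing value is never overwritten silently. The function name ensure_cgroup_driver is hypothetical, and the sketch assumes cgroupDriver is a top-level key in the file.

```shell
#!/bin/sh
# ensure_cgroup_driver FILE DRIVER
# Appends "cgroupDriver: DRIVER" to FILE if no such entry exists.
# Prints "added", "unchanged", or "differs: <current value>".
ensure_cgroup_driver() {
  file=$1
  driver=$2
  current=$(awk -F': *' '$1 == "cgroupDriver" { print $2 }' "$file")
  if [ -z "$current" ]; then
    # Make sure the file ends with a newline before appending.
    if [ -n "$(tail -c 1 "$file")" ]; then
      echo >> "$file"
    fi
    printf 'cgroupDriver: %s\n' "$driver" >> "$file"
    echo "added"
  elif [ "$current" = "$driver" ]; then
    echo "unchanged"
  else
    echo "differs: $current"
  fi
}

# Usage (as root, after backing up the file):
#   ensure_cgroup_driver /var/lib/kubelet/config.yaml systemd
```

A "differs" result is left for manual review on purpose, since changing the driver on a node that already runs workloads deserves a deliberate decision.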

Finally, you need to restart the kubelet:

$ systemctl daemon-reload

$ systemctl restart kubelet

The Metrics Server

To get correct metrics displayed with kubectl top, you need to install the open source project metrics-server. This service provides a scalable, efficient source of container resource metrics, namely CPU and memory. These are also referred to as the “Core” metrics. The Kubernetes Metrics Server collects and aggregates these core metrics in your cluster and is used by other Kubernetes add-ons, such as the Horizontal Pod Autoscaler or the Kubernetes Dashboard.
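Once kubectl top returns values, a small filter can flag pods whose memory usage exceeds a given limit. This is only a sketch: the function name pods_over_limit is hypothetical, it assumes the three-column output format shown earlier (NAME, CPU(cores), MEMORY(bytes)), and it only considers values reported in Mi.

```shell
#!/bin/sh
# pods_over_limit LIMIT_MI
# Reads `kubectl top pod` output on stdin and prints the name and memory
# usage of every pod whose usage (in Mi) is above LIMIT_MI.
pods_over_limit() {
  awk -v limit="$1" 'NR > 1 && $3 ~ /Mi$/ {
    used = $3
    sub(/Mi$/, "", used)          # strip the unit, keep the number
    if (used + 0 > limit + 0) print $1, $3
  }'
}

# Usage:
#   kubectl top pod | pods_over_limit 286
```

With the output from the test above and a limit of 286 (roughly what memory=300M amounts to in Mi), the filter would list java-wildfly-test at 441Mi.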