Today, when we go into our beautiful Internet world, we have to admit that we have forgotten how the technology behind it works. This is bad, because it renders one of the most important inventions of modern times useless. Why is that? And what is the missing link?

The Beginning

At the beginning of the Internet and the World Wide Web (WWW), there was a problem to be solved. Universities found it very difficult to organize the ever-increasing number of studies and publications in a way that made this information findable. How could a student find out whether another university had an answer to their question?

This was the moment when the World Wide Web was invented by Tim Berners-Lee. Since the Internet already existed at that time and university servers were connected to each other, it was obvious to use this technology for publishing knowledge as well, and not just for communication. Once all universities published their work on public, self-hosted servers, it was possible to access the information from anywhere just by knowing the IP address or the name of the university. This was very simple and very efficient. And each university kept control over its own information.

What Happened?

The idea was not limited to universities and could be applied by any organization, any company and any individual with a public server. So everyone was now able to publish information. But what we did then was to publish more and more information on only a few centrally managed servers. Most people today believe the Internet consists only of these points of information. These servers are known as Facebook, Twitter or TikTok. And so we have lost control, leading to all the unpleasant excesses that we see in society today.

What can you do about it? Very simple: look for answers to your questions from the person who actually knows them, not from someone who may have found only part of the answer. Even if that sometimes takes more time.

We Did it Again!

OK, that was the general part of my thinking. But since I am a software architect, I like to look at these things from the technical side. Even if we think we know Internet technology, we are beginning to use it in the wrong way again.

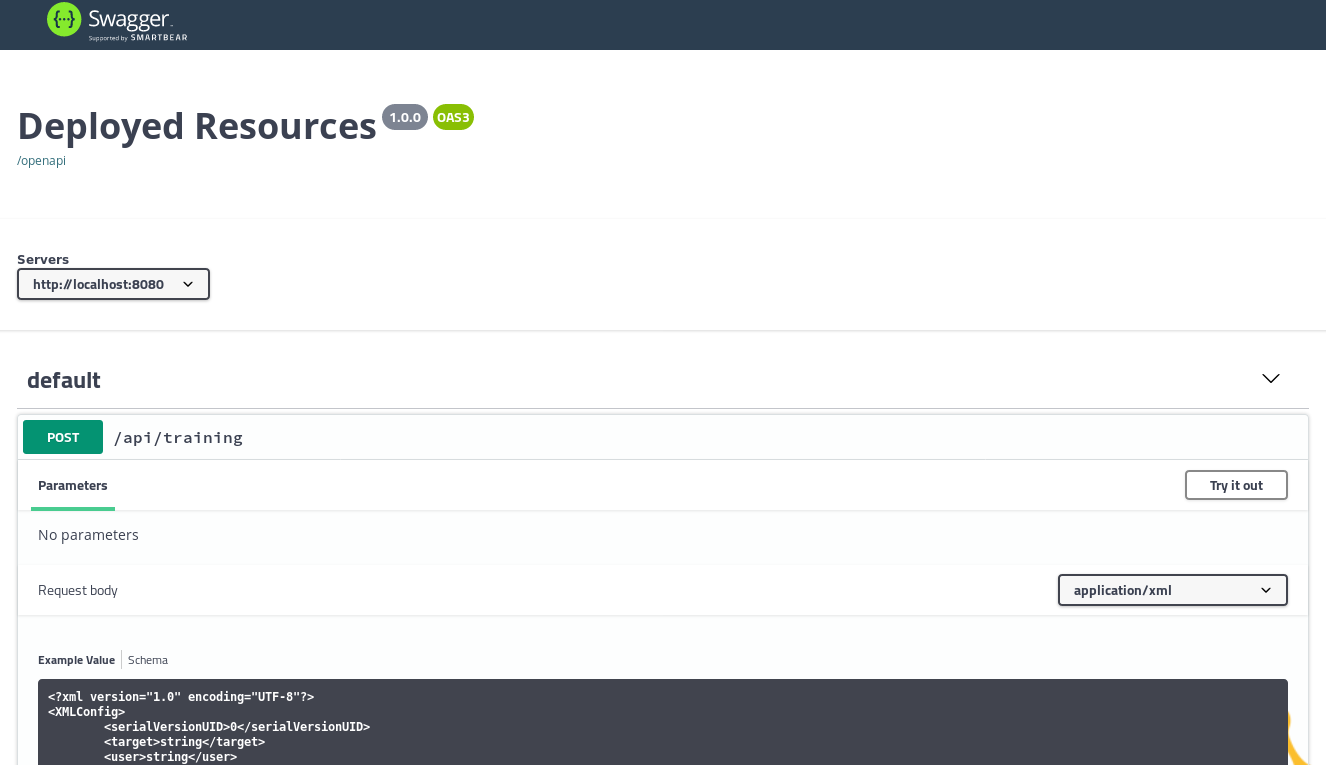

Microservices are the latest excess of this development. The basic idea of the microservice is again comparable to Tim Berners-Lee's invention: manage different kinds of data on separate (micro)servers, connect those servers with each other, and you gain more flexibility and faster solutions. James Lewis and Martin Fowler explain this idea in great detail in their definition of microservices on martinfowler.com.

But the most upsetting thing is this: just as we have reduced the WWW to a few social networks by concentrating the distribution of information, we are now starting to do the same with microservice technology. If you read current blogs about microservices, you'll find that most posts recommend running your services on only a few central platforms such as AWS, Azure or Google Cloud. This is absolutely terrifying.

I myself recently applied this concept of centralizing data access in one of my open source projects (Imixs-SAGA) and developed a central registry service. Although it may sometimes seem useful to centralize things to facilitate access or data management, we should always consider what the basics of a technology are. In the case of Internet technology, these are the decentralization of services and the publication and use of known access points. We should apply these basics to our microservice solutions as well. Only in this way can we say that we understand the Internet.
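
To make the idea of decentralized services with known access points a little more concrete, here is a minimal sketch in Python. It is not taken from Imixs-SAGA or any other project. The service names, the hosts under `example.org` and `example.net`, and the helpers `AccessPoint`, `resolve` and `hosts_in_use` are all hypothetical and only serve as an illustration. Each service owner publishes the address of a server it hosts itself, and a client builds the request URL directly from that published address, without asking a central platform first.

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class AccessPoint:
    """A publicly known entry point published by the owner of a service."""

    owner: str     # organization that hosts and controls the service (hypothetical)
    base_url: str  # self-hosted address published by that owner (hypothetical)

    def resource(self, path: str) -> str:
        """Build the full URL of a resource below this access point."""
        return f"{self.base_url.rstrip('/')}/{path.lstrip('/')}"


# Hypothetical access points: every service lives on a host of its own owner.
KNOWN_ACCESS_POINTS = {
    "orders": AccessPoint("shop.example.org", "https://orders.shop.example.org/api"),
    "invoices": AccessPoint("billing.example.net", "https://billing.example.net/api"),
}


def resolve(service: str, path: str) -> str:
    """Return the URL a client would call, using only the published address."""
    return KNOWN_ACCESS_POINTS[service].resource(path)


def hosts_in_use() -> set[str]:
    """List the distinct hosts behind all known access points."""
    return {urlsplit(ap.base_url).hostname for ap in KNOWN_ACCESS_POINTS.values()}


if __name__ == "__main__":
    print(resolve("orders", "/orders/42"))
    print(sorted(hosts_in_use()))
```

In this sketch, the client only needs the published name of a service, much like a student once only needed the name of a university. How the access points are published (a documentation page, DNS, a configuration file) is left open here on purpose.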