JSF stands for JavaServer Faces (now Jakarta Faces), a web technology that many people underestimate. I wonder why that is. And are you actually crazy if you still use JSF? I don’t think so, and I will share some of my thoughts. (To get an impression of the technology, see the Getting Started blog from Rudy De Busscher.)

The Specification

First of all, JSF is a specification, which is an important advantage over the other technologies it is usually compared to. The specification is developed in an open process in which many developers have worked for years to define a solid, general standard for web applications. This process has recently moved to the Eclipse Foundation, whose rules set very high quality standards. This is one of the biggest advantages, as it ensures that your web application is built on a solid core. Of course, other web frameworks also have large communities, but they are often controlled by a single company that does not always take developers into account. Angular from Google is just one example.

Server Based Framework

But back to JSF. Why is JSF sometimes ridiculed as an outdated technology? I personally started with JSF in its early beginnings in 2001. So it’s obviously an old technology. And I am an old developer too ;-). In the meantime, many other web frameworks have evolved. Many of them are based on JavaScript and follow the single-page application (SPA) paradigm, running in the browser. The idea is to move the application logic into the browser to make applications faster. This idea came up at a time when not everyone was satisfied with JSF and JavaScript was taking off.

JavaServer Faces, as the name implies, is in contrast a server-based framework. This means the application logic is executed on the server. And this is the big difference. At that time, reducing the load on the servers was a valid argument. And of course, this should still be a desirable goal today. But the people who use this as an argument against JSF are often the same ones who rave about serverless functions. Therefore, I don’t think we should consider a server-based framework a stupid idea today.

Self-Contained Microservice

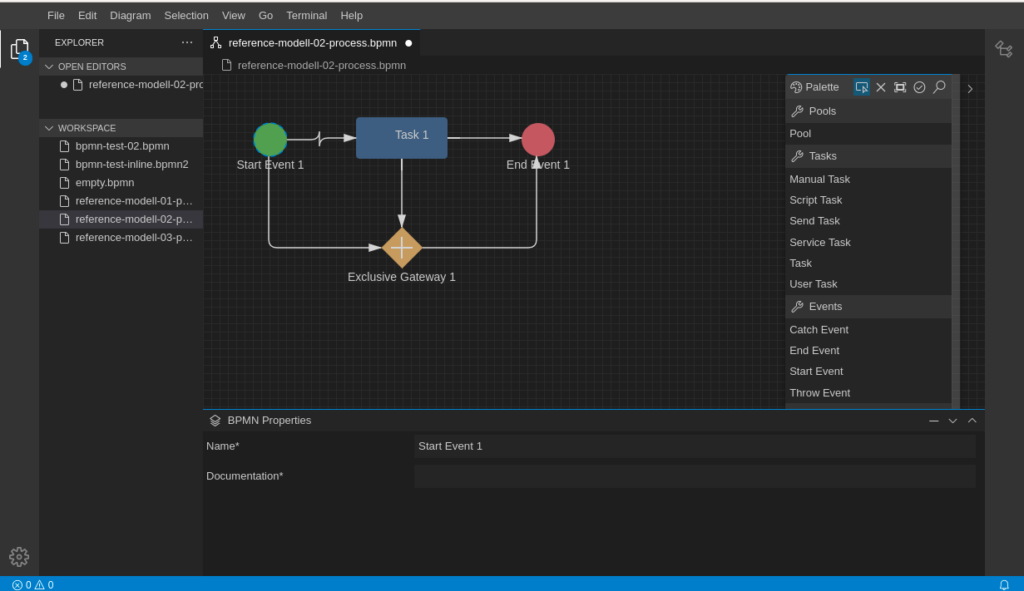

In fact, a server-based web framework offers some advantages. Application logic and business logic can be coupled easily. Within Jakarta EE this is achieved mainly through CDI, EJB and JPA. These technologies are the backend for JSF components. Jakarta EE provides a very clear separation of layers, and JSF fits seamlessly into this concept. The server-side implementation also hides application details from the client. In contrast, in a JavaScript SPA large parts of the application logic are usually exposed in the browser, which can be a security risk in some cases.

So executing the application logic on the server side makes things easier for developers who are responsible for both the backend and the frontend. And this means that applications can be developed faster in many cases. From the point of view of a microservice architecture, a JSF application corresponds to the principle of Self-Contained Services, a pattern that is commonly used in microservice architectures.
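To make the layering a bit more concrete, here is a minimal sketch in plain Java. All class names (`WebsiteRepository`, `WebsiteService`, `WebsiteController`) are hypothetical and not taken from a real project. In a Jakarta EE application the wiring would be done by CDI (`@Inject`) and the repository would typically use JPA; the sketch uses plain constructors and an in-memory list so that it runs without any dependencies.

```java
import java.util.ArrayList;
import java.util.List;

// Persistence layer. Assumption: in Jakarta EE this would typically use JPA
// (an EntityManager); here an in-memory list stands in for the database.
class WebsiteRepository {
    private final List<String> websites = new ArrayList<>();

    void save(String website) {
        websites.add(website);
    }

    List<String> findAll() {
        return List.copyOf(websites);
    }
}

// Business layer. Assumption: in Jakarta EE this would be an EJB or CDI bean.
class WebsiteService {
    private final WebsiteRepository repository;

    WebsiteService(WebsiteRepository repository) {
        this.repository = repository;
    }

    void register(String website) {
        if (website == null || website.isBlank()) {
            throw new IllegalArgumentException("website must not be empty");
        }
        repository.save(website.trim());
    }
}

// Application layer: the kind of bean a JSF page would bind to.
// Assumption: in Jakarta EE it would be a CDI bean that gets the service injected.
class WebsiteController {
    private final WebsiteService service;
    private String website;

    WebsiteController(WebsiteService service) {
        this.service = service;
    }

    public String getWebsite() {
        return website;
    }

    public void setWebsite(String website) {
        this.website = website;
    }

    // Called from the page; delegates to the business layer.
    public void save() {
        service.register(website);
    }
}
```

The point of the sketch is only that the controller talks to the service directly, in the same language and the same deployment unit, without a REST layer in between.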

Simplicity

Since JSF is often described as complex and clumsy, I recently wondered whether this is really true. I migrated one of my Vue.js SPAs to JSF 4.0 and compared the complexity of the resulting code. So finally, I would like to show the simplicity of JSF with an example. (Note: I do not compare JavaScript code with Java here.)

In JavaScript frameworks you typically bind your HTML tags to some code by adding special attributes.

<!-- Vue.js -->
<input type="text"
       name="userwebsite"
       v-model="userwebsite"
       placeholder="enter your website">
Your Website is: <span>{{userwebsite}}</span>

The SPA framework resolves the attribute (v-model) and inserts the matching value from your model written in JavaScript. It also binds the input field so that changes are tracked in your model.

The JSF counterpart does not look much different:

<!-- JSF -->
<h:inputText value="#{userController.website}"
             pt:placeholder="enter your website" />
Your Website is: <h:outputText value="#{userController.website}" />

Here you also bind your model values to input fields or output text. But the code is executed in Java on the server side, which is often the same language your backend is written in anyway, even for SPAs.
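The expression `#{userController.website}` needs a bean on the server that exposes a `website` property. A minimal sketch of such a bean could look like this. The class body is an assumption for illustration, since the original bean is not shown. In a real Jakarta EE application the class would be public and carry the CDI annotations `@Named` and `@RequestScoped`; they are left out here so that the sketch compiles without any dependencies.

```java
// Hypothetical backing bean for the #{userController.website} expressions.
// Assumption: in a Jakarta EE application this class would be public and
// annotated with @Named and @RequestScoped (CDI) so the page can resolve it.
class UserController {

    private String website = "";

    // Read by h:outputText and by h:inputText when the page is rendered.
    public String getWebsite() {
        return website;
    }

    // Called with the submitted value of h:inputText.
    public void setWebsite(String website) {
        this.website = (website == null) ? "" : website.trim();
    }
}
```

The getter and setter pair is all the page needs; the framework calls the setter with the submitted value and the getter when it renders the output.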

Ajax is used by JavaScript web frameworks out of the box, so you usually do not need to care about it. In the example above, the span tag is updated automatically when the value of the input changes.

In JSF you also use Ajax, but you have more control over how it behaves. You can simply enclose a part of your page in an f:ajax tag to get the same behaviour:

<!-- JSF -->
<f:ajax>
  <h:inputText value="#{userController.website}"
               pt:placeholder="enter your website" />
  Your Website is: <h:outputText value="#{userController.website}" />
</f:ajax>

Another example is linking. In a JavaScript framework you again use a special attribute to initiate a rendering life-cycle when the user clicks on an element:

<!-- Vue.js -->
<li class="nav-item" v-on:click="showSection('search')">
  <label>Search</label>
</li>

The showSection function implements some business logic in your JavaScript code and is responsible for handling data and changing the layout.

JSF is not much different:

<!-- JSF -->
<li class="nav-item">
  <h:link outcome="search">
    <label>Search</label>
  </h:link>
</li>

The h:link tag loads a new page named ‘search.xhtml’ from the backend, containing the new layout. All the model binding is handled behind the scenes in the backend. For the user there is no difference in behaviour.
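How the outcome ‘search’ ends up as the page ‘search.xhtml’ can be illustrated with a small sketch. It is a simplified, hypothetical model of what JSF calls implicit navigation, not the framework's actual code: the class `ImplicitNavigationSketch` and the method `resolveViewId` are made up for this example, and explicit navigation rules and query parameters are ignored.

```java
// Simplified, hypothetical model of JSF implicit navigation. This is NOT the
// framework's code; it ignores explicit navigation rules and query strings.
final class ImplicitNavigationSketch {

    static String resolveViewId(String currentViewId, String outcome) {
        String viewId = outcome;

        // Outcome without a file extension: reuse the extension of the current view.
        int lastSlash = viewId.lastIndexOf('/');
        boolean hasExtension = viewId.indexOf('.', lastSlash + 1) >= 0;
        if (!hasExtension) {
            int dot = currentViewId.lastIndexOf('.');
            if (dot >= 0) {
                viewId += currentViewId.substring(dot);
            }
        }

        // Relative outcome: resolve it against the folder of the current view.
        if (!viewId.startsWith("/")) {
            int slash = currentViewId.lastIndexOf('/');
            viewId = currentViewId.substring(0, slash + 1) + viewId;
        }
        return viewId;
    }
}
```

Under these assumptions, calling `ImplicitNavigationSketch.resolveViewId("/index.xhtml", "search")` yields `/search.xhtml`, which is why the markup only has to name the outcome.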

So as you can see from the markup, there is not much difference, and writing a JSF frontend is neither more nor less complex than writing one with a JavaScript-based web framework.

Conclusion

My personal conclusion is that JSF gives me a clearer and more consistent concept for writing my code within a framework. Backend logic and application design are combined in one technology, resulting in a pattern also known as a self-contained microservice.

To me, this is a valid concept even for modern web applications. And computing application logic on the server side is not crazy at all.

With JSF 4.0 you get a modern and well-designed web technology embedded in the latest Jakarta EE 10 platform.