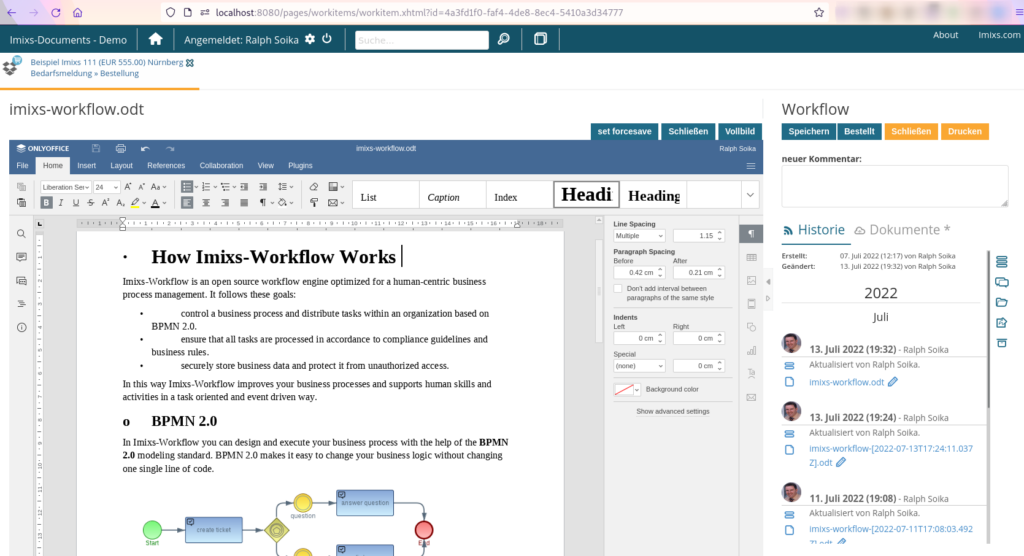

I have been developing Java enterprise applications for more than 20 years. Security has always been an important part of developing enterprise apps, and Jakarta EE (formerly known as Java EE) has always provided a perfect platform for it. But with the new Security API in Jakarta EE 10, especially in conjunction with OIDC, I have had a lot of problems. One reason is that this API has evolved rapidly in recent years, and only with Jakarta EE 10 did a truly final concept emerge.

But at the same time, this concept breaks with some established rules in enterprise development. Many aspects of Jakarta EE are perfectly abstracted through the API specification. This means that, as a developer, I do not have to think about the integration of a database cluster or how to send out emails via an SMTP gateway. I just need to develop against the API specification, and my application will run on all Jakarta EE compatible servers. Side aspects such as databases or messaging can be configured during deployment, independent of my code. This means my code does not know anything about a vendor-specific SQL server or the mail infrastructure in my company. This was also true for security. It was always declarative and not part of my code base. The only thing I needed to provide was, for example, a login page when I wanted a form-based login.

OIDC is Configured By Code

With the new Jakarta Security 3.0, the integration of security has taken a new direction. You now develop a security bean if you need a specific security solution like OIDC. Server platforms like WildFly or Payara still offer out-of-the-box solutions, but in all cases this means you need some kind of proprietary deployment descriptor or have to bind vendor-specific libraries. This is often not an option, as it breaks vendor interoperability. I struggled a lot with this concept and tried to keep the connection via OIDC as configurable as possible. But in the end, I had to admit that this is not possible. The underlying concepts may be too complex to be realized in an abstract and interoperable way.

So if you plan to secure your application with OIDC, you need at least one bean describing your security provider:

    import jakarta.security.enterprise.authentication.mechanism.http.OpenIdAuthenticationMechanismDefinition;
    import jakarta.security.enterprise.authentication.mechanism.http.openid.ClaimsDefinition;

    // The annotation is the whole configuration; the class body stays empty.
    // The ${oidcConfig...} expressions are resolved from the named bean "oidcConfig".
    @OpenIdAuthenticationMechanismDefinition(
            providerURI = "${oidcConfig.providerURI}",
            clientId = "${oidcConfig.clientId}",
            clientSecret = "${oidcConfig.clientSecret}",
            scope = { "openid", "profile", "email", "groups" },
            claimsDefinition = @ClaimsDefinition(callerGroupsClaim = "groups", callerNameClaim = "loginname"),
            redirectURI = "${oidcConfig.redirectURI}")
    public class SecurityConfig {
    }

As you can see in this example, the bean does not have any methods and just declares one single annotation. So we can say this is our configuration. Instead of hard-coding things like the client secret, I am using a configuration bean. This configuration bean in turn uses the Jakarta EE / MicroProfile API to allow a flexible configuration via config files or environment variables:

    import java.io.Serializable;

    import org.eclipse.microprofile.config.inject.ConfigProperty;

    import jakarta.enterprise.context.ApplicationScoped;
    import jakarta.inject.Inject;
    import jakarta.inject.Named;

    // Named bean "oidcConfig": supplies the values referenced by the
    // ${oidcConfig...} expressions in SecurityConfig.
    @ApplicationScoped
    @Named
    public class OidcConfig implements Serializable {

        private static final long serialVersionUID = 1L;

        @Inject
        @ConfigProperty(name = "OIDCCONFIG_PROVIDERURI", defaultValue = "http://localhost/")
        String providerURI;

        @Inject
        @ConfigProperty(name = "OIDCCONFIG_CLIENTID", defaultValue = "undefined")
        String clientId;

        @Inject
        @ConfigProperty(name = "OIDCCONFIG_CLIENTSECRET", defaultValue = "undefined")
        String clientSecret;

        @Inject
        @ConfigProperty(name = "OIDCCONFIG_REDIRECTURI", defaultValue = "undefined")
        String redirectURI;

        public String getClientId() {
            return clientId;
        }

        public String getClientSecret() {
            return clientSecret;
        }

        public String getProviderURI() {
            return providerURI;
        }

        public String getRedirectURI() {
            return redirectURI;
        }
    }

So in the end this all works fine, and I can configure things like the clientId or the clientSecret at deploy time. But my point is that you cannot avoid this kind of implementation.
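To make the deploy-time lookup a bit more tangible, here is a minimal plain-Java sketch of the default precedence that MicroProfile Config applies: system properties first, then environment variables, then the META-INF/microprofile-config.properties file, and finally the defaultValue of the annotation. This is only a simplified model, not the real implementation. The class name, the method name, and all sample values are assumptions made up for illustration.

```java
import java.util.List;
import java.util.Map;

// Simplified model of the MicroProfile Config lookup order (illustration only).
final class ConfigLookupSketch {

    /**
     * Returns the value from the first source that defines the key.
     * The list must be ordered from highest to lowest priority.
     * Falls back to defaultValue if no source defines the key.
     */
    static String resolve(String key, String defaultValue,
                          List<Map<String, String>> sourcesByPriority) {
        for (Map<String, String> source : sourcesByPriority) {
            String value = source.get(key);
            if (value != null) {
                return value;
            }
        }
        return defaultValue;
    }

    public static void main(String[] args) {
        // Hypothetical deployment: nothing set via -D, one env variable, one config file.
        Map<String, String> systemProperties = Map.of();
        Map<String, String> environment = Map.of("OIDCCONFIG_CLIENTID", "my-app");
        Map<String, String> propertiesFile = Map.of(
                "OIDCCONFIG_CLIENTID", "from-file",
                "OIDCCONFIG_PROVIDERURI", "https://sso.example.org/realms/demo");

        List<Map<String, String>> sources =
                List.of(systemProperties, environment, propertiesFile);

        // The env variable wins over the file entry: prints "my-app"
        System.out.println(resolve("OIDCCONFIG_CLIENTID", "undefined", sources));
        // Only the file defines it: prints "https://sso.example.org/realms/demo"
        System.out.println(resolve("OIDCCONFIG_PROVIDERURI", "http://localhost/", sources));
        // No source defines it, so the default applies: prints "undefined"
        System.out.println(resolve("OIDCCONFIG_CLIENTSECRET", "undefined", sources));
    }
}
```

In a container you would of course not write this yourself. The point is only that an environment variable such as OIDCCONFIG_CLIENTID set at deployment overrides whatever is packaged in the config file, without touching the code.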

As you can also see in my first example, it uses some additional parameters like 'scope' or 'claimsDefinition' that are mostly tightly coupled to the OpenID provider you use. And this may break the interoperability of your code.
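Why the claim names couple the code to a provider can be sketched in plain Java. The following is a simplified model of what callerNameClaim and callerGroupsClaim express, not the code a Jakarta Security implementation actually runs. The class and method names, the two token payloads, and the claim name "roles" for the second provider are assumptions invented for this illustration.

```java
import java.util.Collection;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Simplified model of mapping token claims to a caller name and groups (illustration only).
final class ClaimsMappingSketch {

    /** Reads the caller name from the configured claim; fails if the claim is absent. */
    static String callerName(Map<String, Object> claims, String callerNameClaim) {
        Object value = claims.get(callerNameClaim);
        if (value == null) {
            throw new IllegalArgumentException("Token has no claim '" + callerNameClaim + "'");
        }
        return value.toString();
    }

    /** Reads the groups from the configured claim; an absent claim yields no groups. */
    static Set<String> callerGroups(Map<String, Object> claims, String callerGroupsClaim) {
        Set<String> groups = new TreeSet<>();
        Object value = claims.get(callerGroupsClaim);
        if (value instanceof Collection) {
            for (Object group : (Collection<?>) value) {
                groups.add(String.valueOf(group));
            }
        }
        return groups;
    }

    public static void main(String[] args) {
        // Hypothetical payloads of two differently configured providers.
        Map<String, Object> providerA = Map.of(
                "loginname", "anna",
                "groups", List.of("staff", "admin"));
        Map<String, Object> providerB = Map.of(
                "preferred_username", "anna",
                "roles", List.of("staff", "admin"));

        // The mapping from the first example fits provider A: prints "anna [admin, staff]"
        System.out.println(callerName(providerA, "loginname") + " "
                + callerGroups(providerA, "groups"));
        // The same mapping finds no groups in provider B's token: prints "[]"
        System.out.println(callerGroups(providerB, "groups"));
        // Provider B needs its own mapping: prints "[admin, staff]"
        System.out.println(callerGroups(providerB, "roles"));
    }
}
```

With the mapping from the first example, a user of the second provider would log in without any groups, and the lookup of the caller name would fail. The fix is a different annotation value, which means a different bean.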

Working With OIDC Modules

In the end we need to accept that the Security API in Jakarta EE is what it is. Unfortunately, in the area of SSO and OIDC, there are many different providers that often impose very vendor-specific configurations. Therefore, my recommendation is to always place the security bean for the OIDC connection into a separate module (jar library). This allows you to implement different variants of the security bean. As a result, your project can look like this:

    .
    ├── my-app
    │   └── src
    ├── my-app-oidc-keycloak
    │   ├── pom.xml
    │   └── src
    │       └── main
    │           └── java
    │               └── com
    │                   └── SecurityConfig.java
    ├── my-app-oidc-auth0
    │   ├── pom.xml
    │   └── src
    │       └── main
    │           └── java
    │               └── com
    │                   └── SecurityConfig.java
    ......

This allows you to implement vendor-specific configurations if needed. And you can decide at build time which of your OIDC jars you link into your final deployment. This gives you more flexibility, and your application code is still not bound to one single OIDC configuration. You can even fall back to the default Jakarta EE security modules if required.

Conclusion

Perhaps I am being a bit pedantic here. But as a Jakarta EE developer, one is used to developing strictly against an API. For a Spring project, my considerations may seem exaggerated. However, when it comes to developing truly interoperable applications, these considerations are definitely justified. I look forward to your feedback.

Update 25. June 2025

We have developed a new OIDC module that allows an easy and platform-independent integration of OIDC into modern web applications.

Find details about Imixs-Security-OIDC here.