In the following I will share my thoughts on how to set up a PostgreSQL database in Kubernetes with some level of high availability. For that I will introduce three different architectural styles. I do not make a recommendation here because, as always, every solution has its pros and cons.

Kubernetes – PersistentVolume: MountVolume.SetUp failed

While testing Ceph and Kubernetes in combination with the ceph-csi plugin, I ran into a problem with some of my deployments. For some reason the deployment of a pod failed with the following event log:

Events: Type Reason Age From Message

---------------------------------------------------------------------------------------------

Warning FailedScheduling 29s default-scheduler 0/4 nodes are available: 4 persistentvolumeclaim "index" not found.

Warning FailedScheduling 25s (x3 over 29s) default-scheduler 0/4 nodes are available: 4 pod has unbound immediate PersistentVolumeClaims.

Normal Scheduled 11s default-scheduler Successfully assigned office-demo-internal/documents-7c6c86466b-sqbmt to worker-3

Warning FailedMount 3s (x5 over 11s) kubelet, worker-3 MountVolume.SetUp failed for volume "demo-internal-index" : rpc error: code = Internal desc = mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t ext4 -o bind,_netdev /var/lib/kubelet/plugins/kubernetes.io/csi/pv/demo-internal-index/globalmount/demo-internal /var/lib/kubelet/pods/af2f33e0-06da-4429-9f75-908981cb85c3/volumes/kubernetes.io~csi/demo-internal-index/mount

Output: mount: /var/lib/kubelet/pods/af2f33e0-06da-4429-9f75-9034535485c3/volumes/kubernetes.io~csi/demo-internal-index/mount: special device /var/lib/kubelet/plugins/kubernetes.io/csi/pv/demo-internal-index/globalmount/demo-internal does not exist.

The csi-plugin logs messages like:

csi-rbdplugin Mounting command: mount

csi-rbdplugin Mounting arguments: -t ext4 -o bind,_netdev /var/lib/kubelet/plugins/kubernetes.io/csi/pv/demo-internal-index/globalmount/demo-internal-imixs /var/lib/kubelet/pods/af2f33e0-34535-4429-9f75-908981cb85c3/volumes/kubernetes.io~csi/demo-internal-index/mount

csi-rbdplugin Output: mount: /var/lib/kubelet/pods/af2f33e0-06da-4429-35445-908981cb85c3/volumes/kubernetes.io~csi/demo-internal-index/mount: special device /var/lib/kubelet/plugins/kubernetes.io/csi/pv/demo-internal-index/globalmount/demo-internal does not exist.

csi-rbdplugin E0613 15:56:55.814449 32379 utils.go:136] ID: 33 Req-ID: demo-internal-imixs GRPC error: rpc error: code = Internal desc = mount failed: exit status 32

csi-rbdplugin Mounting command: mount

csi-rbdplugin Mounting arguments: -t ext4 -o bind,_netdev /var/lib/kubelet/plugins/kubernetes.io/csi/pv/demo-internal-index/globalmount/demo-internal /var/lib/kubelet/pods/af2f33e0-06da-5552-9f75-908981cb85c3/volumes/kubernetes.io~csi/demo-internal-index/mount

csi-rbdplugin Output: mount: /var/lib/kubelet/pods/af2f33e0-06da-4429-9f75-908981cb85c3/volumes/kubernetes.io~csi/demo-internal-index/mount: special device /var/lib/kubelet/plugins/kubernetes.io/csi/pv/demo-internal-index/globalmount/demo-internal does not exist.

After investigating for many hours, I figured out that something was wrong with the corresponding PV directory on the affected worker node:

/var/lib/kubelet/plugins/kubernetes.io/csi/pv/demo-internal-index/

After deleting this directory on the worker node, everything worked again. See also the discussion here.
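Before deleting such a directory it seems sensible to check that nothing is still mounted below it. The following is only a minimal sketch of that check, meant to be run on the worker node. The function names (`pv_staging_dir`, `is_mounted_below`, `cleanup_hint`) and the `CSI_PV_ROOT` variable are my own illustration and not part of ceph-csi or the kubelet. The script only prints the `rm` command and never deletes anything itself.

```shell
#!/usr/bin/env bash
# Sketch: inspect a possibly stale CSI staging directory on a worker node.
# All names here are hypothetical helpers for illustration.

# Root directory below which the kubelet keeps one directory per PV
# (taken from the paths in the event log above).
CSI_PV_ROOT="${CSI_PV_ROOT:-/var/lib/kubelet/plugins/kubernetes.io/csi/pv}"

# Print the staging directory for a given PV name.
pv_staging_dir() {
  printf '%s/%s\n' "$CSI_PV_ROOT" "$1"
}

# Succeed if the mount table ($2, default /proc/mounts) contains a mount
# point that is the directory itself or lies below it.
is_mounted_below() {
  local dir="$1" mounts="${2:-/proc/mounts}"
  awk -v d="$dir" '$2 == d || index($2, d "/") == 1 { found = 1 }
                   END { exit !found }' "$mounts"
}

# Print what could be done with the directory, without doing it.
cleanup_hint() {
  local dir
  dir="$(pv_staging_dir "$1")"
  if is_mounted_below "$dir" "${2:-/proc/mounts}"; then
    echo "still mounted, not touching: $dir"
  else
    echo "rm -rf -- $dir"
  fi
}
```

Calling `cleanup_hint demo-internal-index` on the worker node prints either a warning or the command you may want to run by hand after reviewing the directory content.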

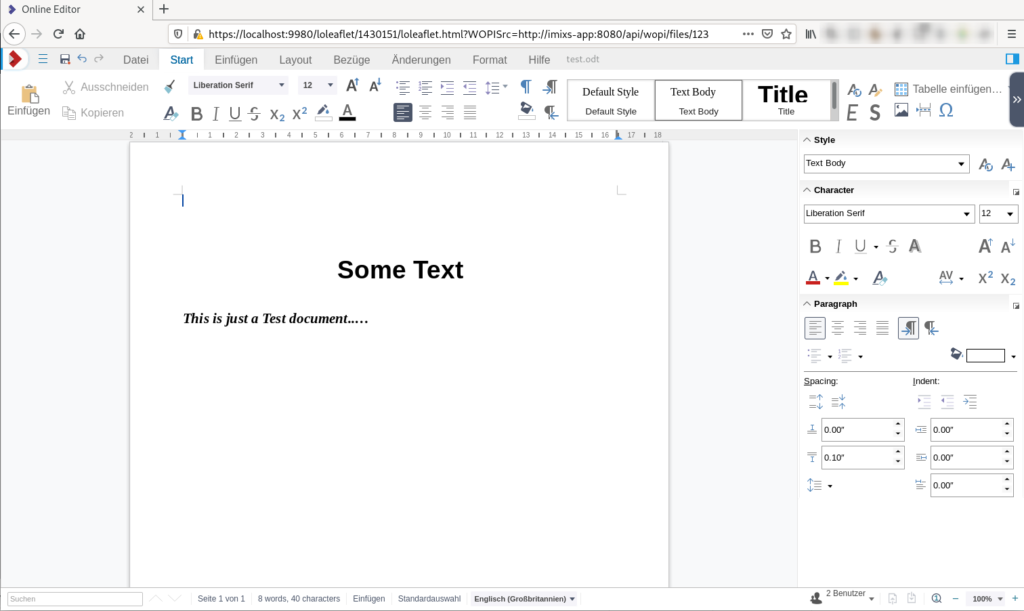

LibreOffice Online – How to Integrate into your Web Application

In this Blog Post I will explain how you can integrate the LibreOffice Online Editor into your Web Application.

In my example I will use a very simple approach just to demonstrate how things work. I will not show how to integrate the editor into your web application with an iFrame, because I assume that if you plan to integrate LibreOffice Online into your own application you are familiar with all the web development stuff.

So let’s get started…

Running CockroachDB on Kubernetes

In my last blog post I explained how to run CockroachDB in a local dev environment with the help of docker-compose. Now I want to show how to set up a CockroachDB cluster in Kubernetes.

CockroachDB is a distributed SQL database with a built-in replication mechanism. This means that the data is replicated over several nodes in a database cluster, which increases scalability and resilience if a single node fails. With its Automated-Repair feature the database also detects data inconsistencies and automatically fixes faulty data on disk. The project is open source and hosted on GitHub.

Because it supports the PostgreSQL wire protocol, CockroachDB can be used out of the box by Java Enterprise applications and microservices using the standard PostgreSQL JDBC driver.

Note: CockroachDB does not support the isolation level of transactions required for complex business logic. For that reason the Imixs-Workflow project does NOT recommend the usage of CockroachDB. See also the discussion here.

CockroachDB – an Alternative to PostgreSQL

The CockroachDB project offers a completely new kind of database. CockroachDB is a distributed database optimized for container-based environments like Kubernetes. The database is open source and hosted on GitHub. CockroachDB implements the standard PostgreSQL API, so it should work with the Java Persistence API (JPA). But CockroachDB does not fully support the transaction API with the same isolation levels as a PostgreSQL database. Transactions are important for Java Enterprise applications in combination with JPA, so it may work but it is not as easy as it should be.

Anyway, CockroachDB has a built-in replication mechanism. This allows you to replicate the data over several nodes in your cluster. With its Automated-Repair feature the database detects data inconsistencies on read and write and automatically fixes faulty data.

So it seems worthwhile to me to test it in combination with Imixs-Workflow, which we typically run with PostgreSQL. In the following I will show how to set up the database with docker-compose and run it together with the Imixs-Process-Manager.

Docker-Compose

To run a test environment with Imixs-Workflow I use docker-compose to set up three database nodes and one instance of a Wildfly application server running the Imixs-Process-Manager.

version: "3.6"
services:
  db-management:
    # this instance exposes the management UI and other instances use it to join the cluster
    image: cockroachdb/cockroach:v20.1.0
    command: start --insecure --advertise-addr=db-management
    volumes:
      - /cockroach/cockroach-data
    expose:
      - "8080"
      - "26257"
    ports:
      - "26257:26257"
      - "8180:8080"
    healthcheck:
      test: ["CMD", "/cockroach/cockroach", "node", "status", "--insecure"]
      interval: 5s
      timeout: 5s
      retries: 5
  db-node-1:
    image: cockroachdb/cockroach:v20.1.0
    command: start --insecure --join=db-management --advertise-addr=db-node-1
    volumes:
      - /cockroach/cockroach-data
    depends_on:
      - db-management
  db-node-2:
    image: cockroachdb/cockroach:v20.1.0
    command: start --insecure --join=db-management --advertise-addr=db-node-2
    volumes:
      - /cockroach/cockroach-data
    depends_on:
      - db-management
  db-init:
    image: cockroachdb/cockroach:v20.1.0
    volumes:
      - ./scripts/init-cockroachdb.sh:/init.sh
    entrypoint: "/bin/bash"
    command: "/init.sh"
    depends_on:
      - db-management
  imixs-app:
    image: imixs/imixs-process-manager
    environment:
      TZ: "CET"
      LANG: "en_US.UTF-8"
      JAVA_OPTS: "-Dnashorn.args=--no-deprecation-warning"
      POSTGRES_CONNECTION: "jdbc:postgresql://db-management:26257/workflow-db"
      POSTGRES_USER: "root"
      POSTGRES_PASSWORD: "dummypassword"
    ports:
      - "8080:8080"
      - "8787:8787"

You can start the environment with

$ docker-compose up

The root user is created by default for each cluster running in ‘insecure’ mode. The root user is assigned to the admin role and has all privileges across the cluster. To connect to the database using the PostgreSQL JDBC driver, you can provide the user root with a dummy password. Note: this is for test and development only. For production you need to start the cluster in ‘secure’ mode. See details here.

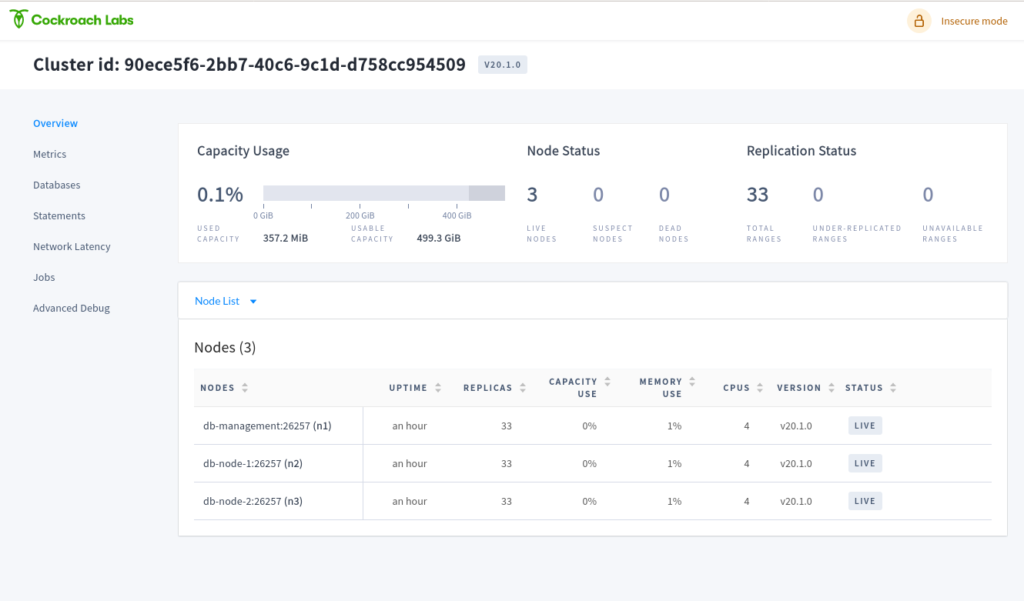

The Web UI

CockroachDB comes with an impressive Web UI, which I expose on port 8180. So you can access the Web UI from your browser:

http://localhost:8180/

Create a Database

The Web UI has no interface to create users or databases, so we need to do this using the PostgreSQL command line syntax. To do so, open a bash shell in one of the three database nodes:

$ docker exec -it imixsprocessmanager_db-management_1 bash

Within the bash shell you can enter the SQL shell with:

$ cockroach sql --insecure

#

# Welcome to the CockroachDB SQL shell.

# All statements must be terminated by a semicolon.

# To exit, type: \q.

#

# Server version: CockroachDB CCL v20.1.0 (x86_64-unknown-linux-gnu, built 2020/05/05 00:07:18, go1.13.9) (same version as client)

# Cluster ID: 90ece5f6-2bb7-40c6-9c1d-d758cc954509

#

# Enter \? for a brief introduction.

#

root@:26257/defaultdb>

Now you can create an empty database for the Imixs-Workflow system:

> CREATE DATABASE "workflow-db";

That’s it! When you restart your deployment, the Imixs-Workflow engine successfully connects to CockroachDB using the PostgreSQL JDBC driver. In the future I will provide some additional posts about running CockroachDB in a Kubernetes cluster based on the open source environment Imixs-Cloud.

NOTE: Further testing shows that the weak isolation level support for ACID transactions in CockroachDB makes it risky to run it in more complex situations. For that reason the Imixs-Workflow project does NOT recommend the usage of CockroachDB. See also the discussion here.
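For a test setup, the database creation step described above can also be scripted instead of typed into the interactive SQL shell. This is only a sketch under a few assumptions: the container name is the one from my environment and will differ in yours, and the helper names `create_db_sql` and `create_database` are made up for this example. It uses the `--execute` flag of `cockroach sql` and `IF NOT EXISTS`, so that running it twice does no harm.

```shell
#!/usr/bin/env bash
# Sketch: create the workflow database non-interactively (insecure test cluster only).

# Container name as it appears in my environment; adjust to yours.
CONTAINER="${CONTAINER:-imixsprocessmanager_db-management_1}"

# Build the SQL statement for a given database name.
create_db_sql() {
  printf 'CREATE DATABASE IF NOT EXISTS "%s";\n' "$1"
}

# Run the statement inside the database container.
create_database() {
  docker exec "$CONTAINER" cockroach sql --insecure --execute "$(create_db_sql "$1")"
}
```

With the cluster running, `create_database workflow-db` should be equivalent to the manual steps.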

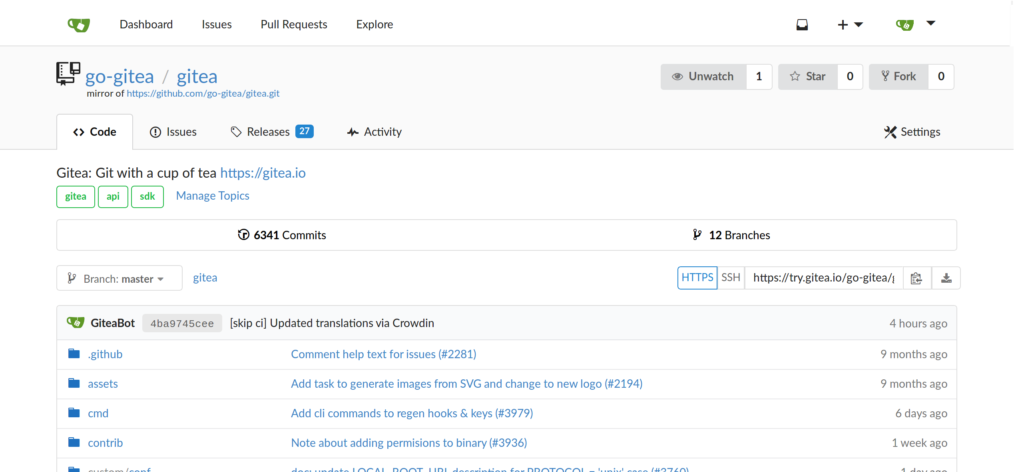

Running Gitea on a Virtual Cloud Server

Gitea is an open source, self-hosted git repository with a powerful web UI. In the following short tutorial I will explain (and remind myself) how to set up Gitea on a single virtual cloud server. In one of my last posts I showed how to run Gitea in a Kubernetes cluster. As I also use git to store my Kubernetes environment configuration, it’s better to run the git repo outside of the cluster. In the following tutorial I will show how to set up a single cloud node running Gitea on Docker.

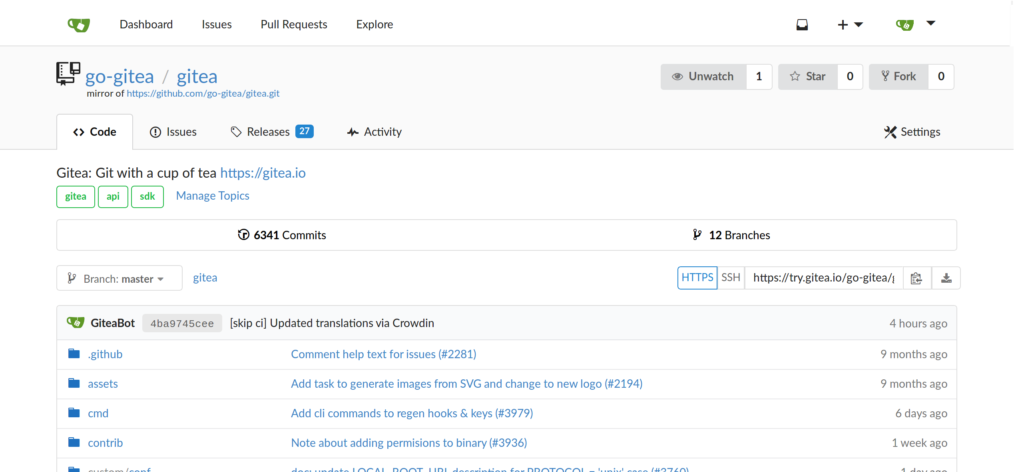

Running Gitea on Kubernetes

Gitea is an open source, self-hosted git repository with a powerful web UI. In the following short tutorial I will explain (and remind myself) how to set up Gitea on a self-managed Kubernetes cluster. If you do not yet have a Kubernetes cluster, take a look at the Imixs-Cloud project.

NFS and Iptables

Recently I installed an NFS server to back up my Kubernetes cluster. Even though I protected the NFS server via the exports file to allow only cluster members to access it, there was still a new security risk. NFS comes together with the Remote Procedure Call (RPC) daemon, and this daemon enables attackers to find out information about your network. So it is a good idea to protect the RPC daemon, which is running on port 111, from abuse.

To test if your server has an open rpc port you can run a telnet from a remote node:

$ telnet myserver.foo.com 111

Trying xxx.xxx.xxx.xxx...

Connected to myserver.foo.com.

This indicates that rpc is visible from the internet. You can also check the rpc ports on your server with:

$ rpcinfo -p

program vers proto port service

100000 4 tcp 111 portmapper

100000 3 tcp 111 portmapper

100000 2 tcp 111 portmapper

100000 4 udp 111 portmapper

100000 3 udp 111 portmapper

100000 2 udp 111 portmapper

Iptables

If you run Kubernetes or Docker on a server you usually have iptables installed already. You can test this by listing the existing firewall rules with the option -L:

$ iptables -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain FORWARD (policy DROP)

target prot opt source destination

DOCKER-USER all -- anywhere anywhere

DOCKER-ISOLATION-STAGE-1 all -- anywhere anywhere

ACCEPT all -- anywhere anywhere ctstate RELATED,ESTABLISHED

DOCKER all -- anywhere anywhere

ACCEPT all -- anywhere anywhere

ACCEPT all -- anywhere anywhere

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain DOCKER (1 references)

target prot opt source destination

ACCEPT tcp -- anywhere 172.17.0.2 tcp dpt:9042

ACCEPT tcp -- anywhere 172.17.0.2 tcp dpt:afs3-callback

Chain DOCKER-ISOLATION-STAGE-1 (1 references)

target prot opt source destination

DOCKER-ISOLATION-STAGE-2 all -- anywhere anywhere

RETURN all -- anywhere anywhere

Chain DOCKER-ISOLATION-STAGE-2 (1 references)

target prot opt source destination

DROP all -- anywhere anywhere

RETURN all -- anywhere anywhere

Chain DOCKER-USER (1 references)

target prot opt source destination

RETURN all -- anywhere anywhere

This is a typical example of what you will see on a server with the Docker daemon installed. Besides the three default chains ‘INPUT’, ‘FORWARD’ and ‘OUTPUT’ there are also some custom Docker chains describing the rules.

So the goal is to add new rules that protect the RPC daemon from abuse.

Back up your Original iptables

Before you start adding new rules, make a backup of your original rule set:

$ iptables-save > iptables-backup

This file can help you if something goes wrong later…
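If you experiment a lot, a timestamped backup avoids overwriting an older one. The following is only a small sketch: `backup_rules`, `restore_hint` and the `IPTABLES_SAVE` variable are names I made up here. The only real commands involved are `iptables-save` and `iptables-restore`.

```shell
#!/usr/bin/env bash
# Sketch: write a timestamped iptables backup and show how to roll back.

# Save the current ruleset into the given directory (default: current dir)
# and print the name of the backup file.
# IPTABLES_SAVE can be overridden, e.g. for a dry run without root rights.
backup_rules() {
  local dir="${1:-.}"
  local file
  file="$dir/iptables-backup-$(date +%Y%m%d-%H%M%S)"
  ${IPTABLES_SAVE:-iptables-save} > "$file" && echo "$file"
}

# Print the command that restores a given backup file.
restore_hint() {
  echo "iptables-restore < $1"
}
```

Running `backup_rules /root` as root prints the name of the new backup file, and `restore_hint` with that name prints the matching rollback command.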

Adding a RPC Rule

If you want to use RPC in the internal network but prohibit it from the outside, you can add the following iptables rules. In this example I explicitly name the cluster nodes which should be allowed to use RPC port 111. All other requests to the RPC port will be dropped.

Replace [SERVER-NODE-IP] with the IP address of your cluster node:

$ iptables -A INPUT -s [SERVER-NODE-IP] -p tcp --dport 111 -j ACCEPT

$ iptables -A INPUT -s [SERVER-NODE-IP] -p udp --dport 111 -j ACCEPT

$ iptables -A INPUT -p tcp --dport 111 -j DROP

$ iptables -A INPUT -p udp --dport 111 -j DROP

These rules explicitly allow SERVER-NODE-IP to access the service; requests from all other clients will be dropped. You can easily add additional nodes before the DROP rules.

You can verify that the new rules were added to your existing rules with:

$ iptables -L

You may write a small bash script with all the iptables commands. This makes testing your new ruleset more convenient.
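Such a script could look like the following sketch. It takes the node IPs as arguments, emits the ACCEPT rules first and the DROP rules last, and by default only prints the commands instead of running them. The function name `rpc_rules` and the `IPTABLES` variable are my own choice for this example; the addresses in the usage line are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: generate the RPC rules for a list of cluster node IPs.

RPC_PORT=111

# Dry run by default: the commands are only printed.
# Set IPTABLES=iptables (and run as root) to really apply them.
IPTABLES="${IPTABLES:-echo iptables}"

rpc_rules() {
  local ip proto
  # Allow every listed node to reach the RPC port ...
  for ip in "$@"; do
    for proto in tcp udp; do
      $IPTABLES -A INPUT -s "$ip" -p "$proto" --dport "$RPC_PORT" -j ACCEPT
    done
  done
  # ... and drop all other requests to that port.
  for proto in tcp udp; do
    $IPTABLES -A INPUT -p "$proto" --dport "$RPC_PORT" -j DROP
  done
}
```

For example `rpc_rules 10.0.0.1 10.0.0.2` prints four ACCEPT rules followed by the two DROP rules. Note that `-A` appends, so applying the script twice creates duplicate rules.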

Saving the Ruleset

Inserting new rules into the firewall carries some risk of its own. If you do something wrong you can lock yourself out of your server, for example if you block the SSH port 22.

The good thing is that rules created with the iptables command are only stored in memory. If the system is restarted before the iptables rule set is saved, all rules are lost. So in the worst case you can reboot your server to reset your new rules.

Once you have tested your rules, you can persist the new ruleset:

$ iptables-save > iptables-newruleset

After a reboot your new rules will still be ignored. To tell Debian to use the new ruleset you have to store it in /etc/iptables/rules.v4 (this file is loaded at boot when the iptables-persistent package is installed):

$ iptables-save > /etc/iptables/rules.v4

Finally you can restart your server. The new RPC rules will be applied during boot.
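After the reboot it is worth repeating the port test from a remote node that is not on the allow list. Instead of telnet, the check can be scripted. This is a minimal sketch that relies on bash's `/dev/tcp` pseudo device and the `timeout` command from coreutils; the function name `check_tcp_port` is just an example. It only tests TCP, not UDP, and it cannot tell a dropped packet from a closed port.

```shell
#!/usr/bin/env bash
# Sketch: test whether a TCP port is reachable from this machine.

# Usage: check_tcp_port HOST PORT [TIMEOUT_SECONDS]
check_tcp_port() {
  local host="$1" port="$2" wait="${3:-3}"
  # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connection.
  # A DROP rule shows up as a timeout, a closed port as a refused connection.
  if timeout "$wait" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
    echo "open"
  else
    echo "closed or filtered"
  fi
}
```

From a node outside the cluster, `check_tcp_port myserver.foo.com 111` should no longer report an open port once the rules are active.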

Migrating to Jakarta EE 9

In this blog post I will document how we at Imixs-Workflow migrated from Java EE to Jakarta EE 9. The Java Enterprise stack has always been known for providing a very reliable and stable platform for developers. We at Imixs started with Java EE in its early days, in 2003. At that time Java EE was not comparable to the platform we know today. For me the most impressive part of the journey with Java EE over the last 17 years was the fact that you could always rely on the platform. Even when new concepts and features were introduced, your existing code still worked. For a human-centric workflow engine like our open source project Imixs-Workflow, this is an important aspect. A workflow engine has to be sustainable. A long-running business process may take years from its creation to its final state. An insurance process is one example of this kind of business process. I personally run customer projects that started with Imixs-Workflow on Glassfish, switched to JBoss, migrated to Payara, and run on Wildfly today. Upgrading the Java EE version and switching the server platform was never something special that you had to write much about. But with Jakarta EE 9 the situation changed dramatically.

Monitoring Your Kubernetes Cluster the Right Way

Monitoring a Kubernetes cluster does not seem that difficult when you look at the hundreds of blogs and tutorials. But there is a problem: the dynamic and rapid development of Kubernetes. As a result, you will find many blog posts describing a setup that may no longer work properly in your environment. This is not because the author provided a bad tutorial, but simply because the article may be more than a year old. Many things have changed in Kubernetes, and the area of metrics and monitoring is affected particularly often.

For example, you will find many articles describing how to set up the cadvisor service to get container metrics. But this technology has since become part of the kubelet, so an additional installation should no longer be necessary and can, in the worst case, lead to incorrect metrics. The many Grafana dashboards for displaying metrics have evolved as well. Older dashboards are usually no longer suitable for a new Kubernetes environment.

Therefore, in this tutorial I would like to show how to set up monitoring correctly for the current Kubernetes version 1.19.3. And of course this blog post will also be outdated after some time. So be warned 😉
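To see that the kubelet already provides the cadvisor metrics, you can query them through the API server proxy. This is just a small sketch, assuming `kubectl` is configured for your cluster; the helper names `cadvisor_metrics_path` and `fetch_cadvisor_metrics` are made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: read the cadvisor metrics that the kubelet exposes for a node.

# Build the API path for the cadvisor metrics of a node.
cadvisor_metrics_path() {
  printf '/api/v1/nodes/%s/proxy/metrics/cadvisor\n' "$1"
}

# Fetch the raw metrics in Prometheus text format via the API server.
fetch_cadvisor_metrics() {
  kubectl get --raw "$(cadvisor_metrics_path "$1")"
}
```

For example `fetch_cadvisor_metrics worker-3 | head` shows the first container metrics of that node without any additional cadvisor installation.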
